"You can't improve what you can't measure"

So everything gets measured.

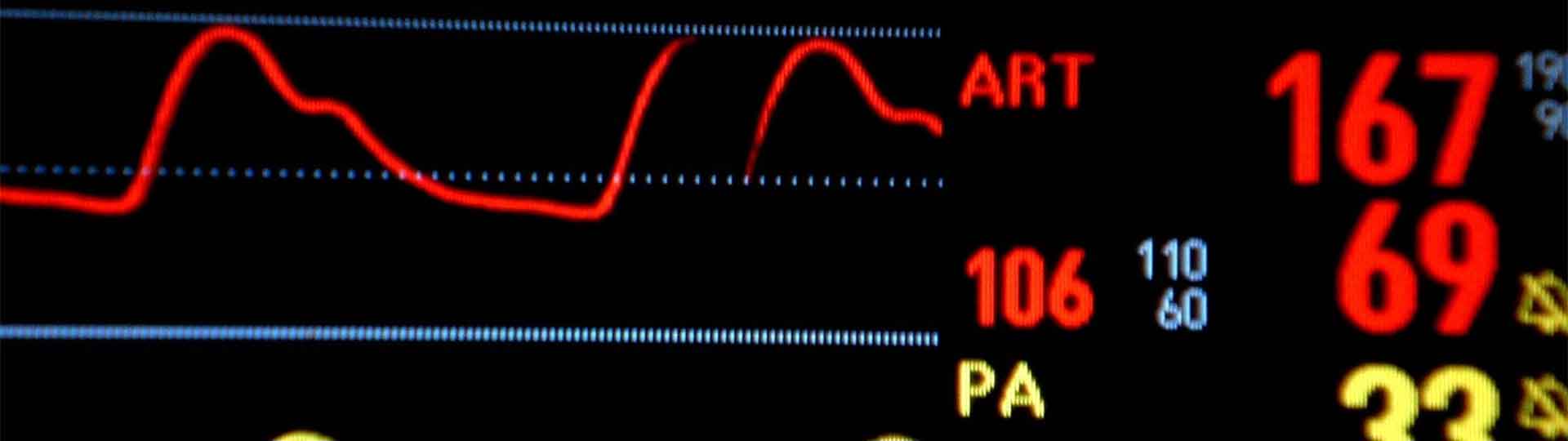

Take blood pressure. High blood pressure, known as hypertension, is often called the "silent killer" because it usually causes no symptoms. Left unmanaged, it can wreak all sorts of damage on the body.

We know that high blood pressure is bad, but what does a blood pressure measurement really tell us about our health? Does a normal measurement mean you're healthy?

While average blood pressure has stayed pretty steady since 1975, there's still reasonable disagreement about what you should do when yours isn't average. After all, genetics affect blood pressure. Blood pressure varies with the time of day. And for some people, just having it measured triggers a stress response that raises it. Trends, variance, and context all matter when working out what blood pressure reveals about your health.

Still, the science is pretty clear: statistically, the closer your blood pressure is to the normal range, the better off you're likely to be.

So normal blood pressure becomes the goal, and that's when things can go sideways. Blood pressure can become a false indicator of health. It can crowd out attention to other dimensions of cardiovascular health. It can lead to overmedication. Despite your good intentions, your blood pressure goals might do more harm than good.

This phenomenon is common enough that there's even a name for it: Goodhart's law. Goodhart's law posits that when a measure becomes a target, it ceases to be a good measure. Goals become subject to bias, corruption, and misunderstanding.

Throughout all manner of work and all walks of life (and especially in software), you'll find Goodhart's law rearing its ugly head. It can trip up the newbie, the experienced pro, and the pointy-headed guru alike.

Among the million different metrics software teams might capture, one of the most common is code coverage. Code coverage tells you which code is exercised by your tests. It can be evaluated at the project, module, class, and function level, and even for individual lines of code. There are great tools that collect code coverage data, and if you've got automated tests that run on every build, you may be able to collect it every time a developer commits. Powerful stuff.
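Conceptually, a coverage tool watches which lines execute while your tests run. Here's a minimal Python sketch of line coverage built on the standard library's `sys.settrace` hook; `measure_line_coverage` and `classify` are hypothetical names made up for illustration. Real tools such as coverage.py do far more, including branch coverage and whole-program reporting.

```python
import dis
import sys


def measure_line_coverage(func, *args, **kwargs):
    """Call func(*args, **kwargs) and report which of its lines ran.

    Returns (executed, executable): two sets of line numbers in func's body.
    A toy tracer: it only looks at func's own lines and ignores branch
    coverage, other functions, and other files.
    """
    code = func.__code__
    executed = set()

    def tracer(frame, event, arg):
        # Only trace frames running func's own code; skip everything else.
        if frame.f_code is not code:
            return None
        if event == "line":
            executed.add(frame.f_lineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args, **kwargs)
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before

    # Lines that have bytecode attached, i.e. lines a test could execute.
    executable = {line for _, line in dis.findlinestarts(code) if line is not None}
    # Ignore the 'def' line, which some Python versions attribute setup code to.
    executable.discard(code.co_firstlineno)
    executed.discard(code.co_firstlineno)
    return executed, executable


def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


executed, executable = measure_line_coverage(classify, 5)
missed = sorted(executable - executed)
print(f"{len(executed)}/{len(executable)} lines covered; never ran: {missed}")
```

Calling `classify(5)` runs two of its three lines; the `return "negative"` line never executes, so it shows up as uncovered until some test passes a negative number.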

And when you see what's covered and what's not, it's reasonable to want better code coverage. Because if we have good code coverage, we must have thorough testing. And if we have thorough testing, we must be building quality software. Right? So goals emerge: cover more code, require tests that cover every new commit, and so on.

The danger is obvious: it's easy to write tests that cover a lot of code but don't actually test anything. And that means if the goal is code coverage instead of well-tested software, corruption creeps in.
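To make that concrete, here's a hypothetical Python sketch; `apply_discount` and both checks are made up for illustration. The function has a deliberate bug: it returns the discount amount instead of the discounted price. Both checks execute every line of `apply_discount`, including the error branch, so a line coverage tool would score them identically. Only one of them notices the bug.

```python
def apply_discount(price, percent):
    # Hypothetical function under test, with a deliberate bug:
    # it returns the discount amount instead of the discounted price.
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * percent / 100


def hollow_check():
    # Executes every line of apply_discount, including the error branch,
    # but never looks at a result, so it can't fail on a wrong answer.
    apply_discount(100, 10)
    try:
        apply_discount(100, 150)
    except ValueError:
        pass


def real_check():
    # Executes exactly the same lines, but verifies what comes back.
    if apply_discount(100, 10) != 90:
        raise AssertionError("10% off 100 should be 90")
    try:
        apply_discount(100, 150)
    except ValueError:
        pass
    else:
        raise AssertionError("a 150% discount should be rejected")


def passes(check):
    """Return True if the check runs without raising AssertionError."""
    try:
        check()
    except AssertionError:
        return False
    return True


print("hollow check passes:", passes(hollow_check))  # True, despite the bug
print("real check passes:", passes(real_check))      # False: the bug is caught
```

Same coverage, very different value: the hollow check would stay green no matter what `apply_discount` returned.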

That doesn't mean code coverage isn't incredibly useful. While it's dangerous to use code coverage to make claims about your software's quality, it's universally useful for telling you what isn't being tested. Maybe your tests suck. Maybe they're great. Either way, code that isn't covered is code that isn't tested.

So we know code coverage can be valuable when used appropriately, but what about metrics like project size, complexity, velocity, bug counts, features delivered, test counts, build failures, customer complaints, and all the other ways we try to score our software? All of that data makes for pretty charts and dashboards, but does it tell us whether our software is any good?

It can, but not exactly.

Quality is ultimately subjective. A team making software for critical medical devices, for example, is going to have a different bar for quality than a team making software that makes fart noises. And that subjectivity is the lens through which all those metrics we capture are evaluated.

Like with blood pressure, your software metrics must be examined in context. You have to look at trends. You have to consider variance. And you have to resist the bias, corruption, and misunderstanding that will inevitably pollute your data.
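Looking at trends and variance rather than a single reading takes only a little arithmetic. As a hedged sketch in Python (the `summarize` helper and the coverage numbers are hypothetical, invented for illustration), here's one way to summarize a metric's history: the mean, the sample standard deviation as a measure of how much the metric normally bounces around, and a least-squares slope as the trend.

```python
from statistics import mean, stdev


def summarize(history):
    """Return (mean, sample standard deviation, trend) for a metric series.

    The trend is the least-squares slope: the average change per
    measurement. Needs at least two measurements.
    """
    xs = range(len(history))
    x_bar, y_bar = mean(xs), mean(history)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, history)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    return y_bar, stdev(history), slope


# Hypothetical weekly code coverage percentages, invented for illustration.
coverage = [71.0, 72.5, 71.8, 74.0, 73.2, 75.1]
avg, spread, trend = summarize(coverage)
print(f"mean {avg:.1f}%, std dev {spread:.1f} points, trend {trend:+.2f} points/week")
```

Even this much context makes a single week's number easier to judge: a one-point dip means little if readings routinely swing by more than that.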

If your team is not actively measuring code coverage or one of these other metrics, don't panic. You're in good company. Lots of teams, including some really good ones, don't have magic dashboards full of metrics and charts and graphs. And many of the teams that do don't interpret what they have effectively or constructively.

But measuring your software development effort and interpreting those results intelligently can help make you better. Just always be skeptical of what that data is telling you and especially skeptical of any measure that (deliberately or accidentally) turns into a goal.